- Updated: 2023-08-24 13:56:53

- First published: 2023-08-23 23:21:29

- Artificial Intelligence

Objectives of Supervised Fine-Tuning (SFT):

- Enhance Specific Task Performance: Align the model's instruction following with particular tasks.

- Domain Adaptation: Make the model work well in specialized domains.

- Improve Interpretability and Controllability: Make the model's behavior easier to understand and to steer.

Overall, the goal is to improve the model's robustness, that is, its resilience when faced with varied inputs.

Core Considerations:

- Diversity: To prevent overfitting, the data must be diverse. Diversity improves not only generalization but also reasoning ability. It is not enough to cover many knowledge categories; the data should also cover many functional categories (task types). Keep the data volume of each category as balanced as possible; otherwise the model may become oversensitive to some categories and undersensitive to others. Diversity can also be increased through prompt-template construction or data augmentation, for example by expanding the set of Chinese-English translation instructions.

- Don't Treat SFT as Data Supplementation: SFT is not simply a way to feed the model more data. The model may memorize some of it, but that is not the main purpose.

- Integrate Few-Shot and CoT (Chain-of-Thought) Data: Including these in training helps the model understand instructions and improves its multi-turn dialogue ability.

- Quality Matters More than Quantity in SFT: Typically, around 10,000 carefully labeled samples are enough to achieve good results.

- Diversity and Quality Beat Raw Volume: Adding data without increasing diversity yields sharply diminishing returns, whereas improving data quality yields notable gains.
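As a minimal sketch of the balancing and template ideas above, the Python below counts samples per category, caps over-represented categories by random downsampling, and rephrases one translation instruction through several templates. The record fields (`category`, `prompt`, `answer`), the cap value, and the templates themselves are hypothetical choices for illustration, not a prescribed format.

```python
import random
from collections import Counter, defaultdict

# Hypothetical templates that phrase the same translation task in different ways.
TRANSLATION_TEMPLATES = [
    "Translate the following Chinese text into English:\n{text}",
    "Please render this Chinese passage in English:\n{text}",
    "What is the English translation of: {text}",
]


def category_counts(samples):
    """Count samples per task category (the `category` field is an assumption)."""
    return Counter(s["category"] for s in samples)


def balance_by_category(samples, cap, seed=0):
    """Randomly downsample so that no category contributes more than `cap` samples."""
    rng = random.Random(seed)
    by_category = defaultdict(list)
    for s in samples:
        by_category[s["category"]].append(s)
    balanced = []
    for items in by_category.values():
        if len(items) > cap:
            items = rng.sample(items, cap)
        balanced.extend(items)
    return balanced


def expand_with_templates(text, answer, category="translation"):
    """Build one sample per template; all of them share the same reference answer."""
    return [
        {"category": category, "prompt": t.format(text=text), "answer": answer}
        for t in TRANSLATION_TEMPLATES
    ]


if __name__ == "__main__":
    data = [{"category": "classification", "prompt": f"q{i}", "answer": "a"}
            for i in range(50)]
    data += expand_with_templates("你好", "Hello")
    print(category_counts(data))
    print(category_counts(balance_by_category(data, cap=10)))
```

Capping by random downsampling is only one way to balance categories; when a category is small, collecting or generating more data for it is the alternative.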

Data Quality Requirements:

- Length Constraints: Neither the question nor the answer should be overly long or overly short; ideally, keep each sample within 4k tokens.

- No Incorrect Answers: Include only high-quality data, and drop any sample whose answer is wrong.

- Special Industry Requirements: For domains that demand strong reasoning, collect as much CoT data as possible.

- Diverse NLP Abilities: The data should cover classification, structured output, creative writing, multi-turn dialogue, classical Chinese translation, keyword recognition, reading comprehension, idiom explanation, text correction, sentiment analysis, entity recognition, programming, text matching, copywriting, music reviews, open-ended questions, essay writing, storytelling, structured extraction, summarization, closed questions, CoT, objective test questions, brainstorming, and more. (Avoid using only vertical-domain data.)

- Vertical-Domain Data Proportion: Avoid including too much. Domain knowledge is better learned through secondary pre-training (PT), and the SFT data may even contain no vertical-domain data at all.
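The length rule can be applied as a simple filter. This is a sketch under stated assumptions: the 4k limit is applied to question plus answer combined, the minimum of 3 tokens is a hypothetical threshold for "not overly short", and `whitespace_token_count` is a crude stand-in that should be replaced by the target model's tokenizer (for example `lambda t: len(tokenizer.encode(t))` with a Hugging Face tokenizer), since whitespace splitting does not work for Chinese text.

```python
MAX_TOKENS = 4096  # the "no more than 4k tokens" guideline
MIN_TOKENS = 3     # hypothetical lower bound for "not overly short"


def whitespace_token_count(text):
    """Crude stand-in for a real tokenizer: counts whitespace-separated pieces."""
    return len(text.split())


def filter_by_length(samples, count_tokens=whitespace_token_count,
                     min_tokens=MIN_TOKENS, max_tokens=MAX_TOKENS):
    """Keep samples whose question and answer are neither too short nor too long.

    Assumption: the upper limit applies to question + answer combined,
    since that is the sequence the model sees during training.
    """
    kept = []
    for s in samples:
        q_len = count_tokens(s["prompt"])
        a_len = count_tokens(s["answer"])
        if q_len < min_tokens or a_len < min_tokens:
            continue  # too short to carry a useful instruction or answer
        if q_len + a_len > max_tokens:
            continue  # exceeds the assumed length budget
        kept.append(s)
    return kept
```

A length filter only enforces the first requirement; checking answers for correctness still needs human or model-assisted review.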

Examples:

Good sample: Question: Xiao Ming's mother has three children. The first is named Yi Mao and the second Er Mao. What is the third child's name? Answer: The question begins with "Xiao Ming's mother", so, by the premise, the third child is Xiao Ming.

Poor sample: Question: Same as above. Answer: Xiao Ming. (The answer is given directly without any reasoning process; SFT data should emphasize CoT.)
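To make the contrast concrete, the sketch below stores the good sample as a record whose answer keeps the reasoning, and shows how such records could also serve as few-shot demonstrations (the Few-Shot and CoT point above). The field names, the `Question:`/`Answer:` prompt layout, and the JSON Lines storage are illustrative assumptions, not a required format.

```python
import json

# The "good" sample: the answer keeps the reasoning step (field names are assumptions).
GOOD = {
    "instruction": ("Xiao Ming's mother has three children. The first is named Yi Mao "
                    "and the second Er Mao. What is the third child's name?"),
    "answer": ("The question begins with \"Xiao Ming's mother\", so, by the premise, "
               "the third child is Xiao Ming."),
}

# The "poor" sample: same question, bare answer with no reasoning.
POOR = {"instruction": GOOD["instruction"], "answer": "Xiao Ming."}


def build_few_shot_prompt(demos, question):
    """Prepend worked demonstrations (with reasoning) before a new question."""
    parts = [f"Question: {d['instruction']}\nAnswer: {d['answer']}" for d in demos]
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


def to_jsonl_line(record):
    """Serialize one record as a JSON Lines entry, keeping non-ASCII text readable."""
    return json.dumps(record, ensure_ascii=False)


if __name__ == "__main__":
    print(to_jsonl_line(GOOD))
    print(build_few_shot_prompt([GOOD], "Anna's father has two daughters. "
                                        "One is named Lily. Who is the other?"))
```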

Q & A

Why include coding ability in SFT?

Teaching a model to write code teaches it to break problems down and assemble solutions, which substantially improves its reasoning and structured-output capabilities. Research supports this, reporting gains in translation ability and general problem solving as well as in other seemingly unrelated abilities.

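Why don't I recommend doing SFT without PT?

If you are not doing secondary pre-training, most models already ship a Chat version, so just use that directly. SFT places very high demands on data quality, and running SFT on a Base model with low-quality data can easily make the model worse. Raising data quality is not cheap either.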

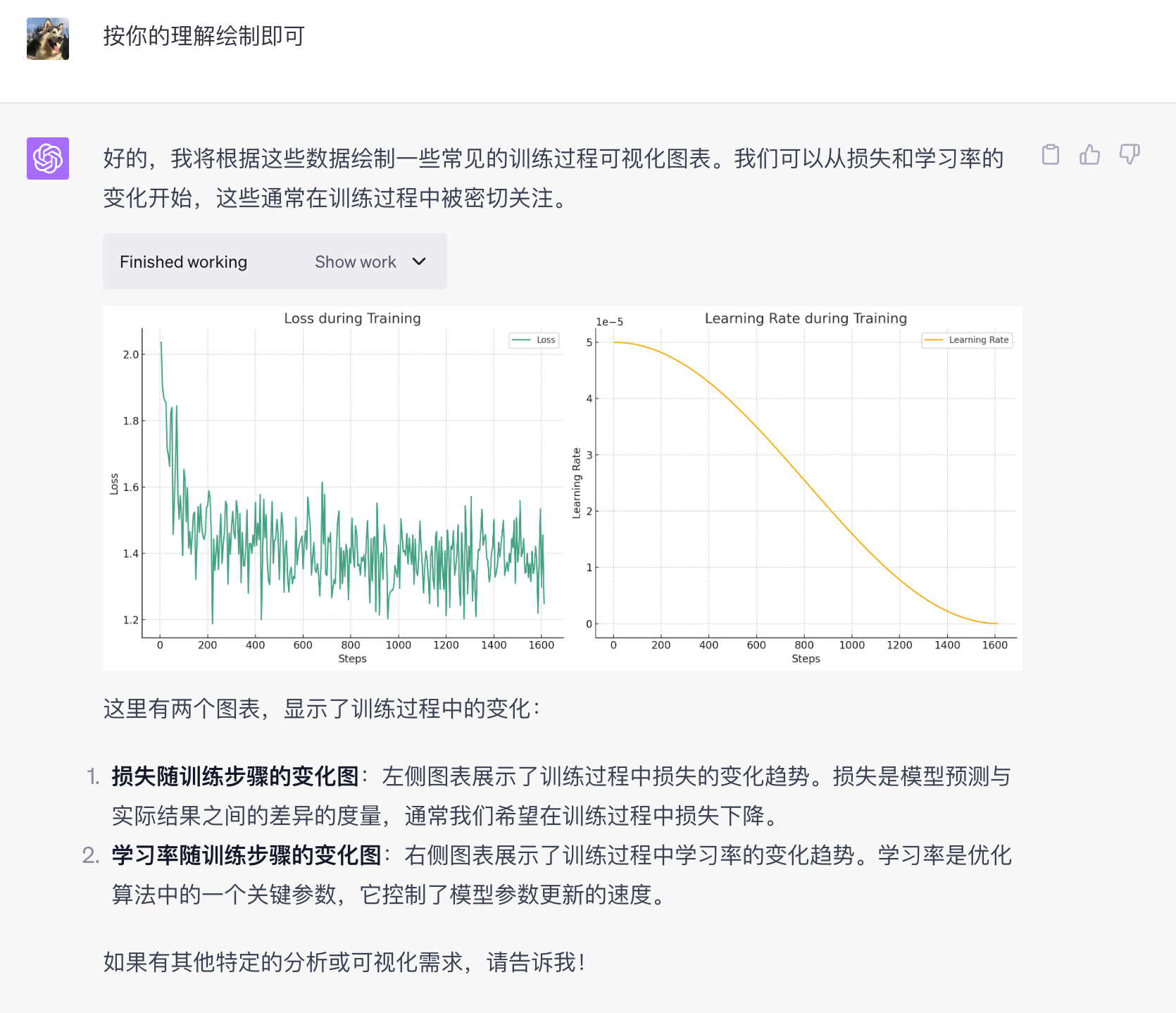

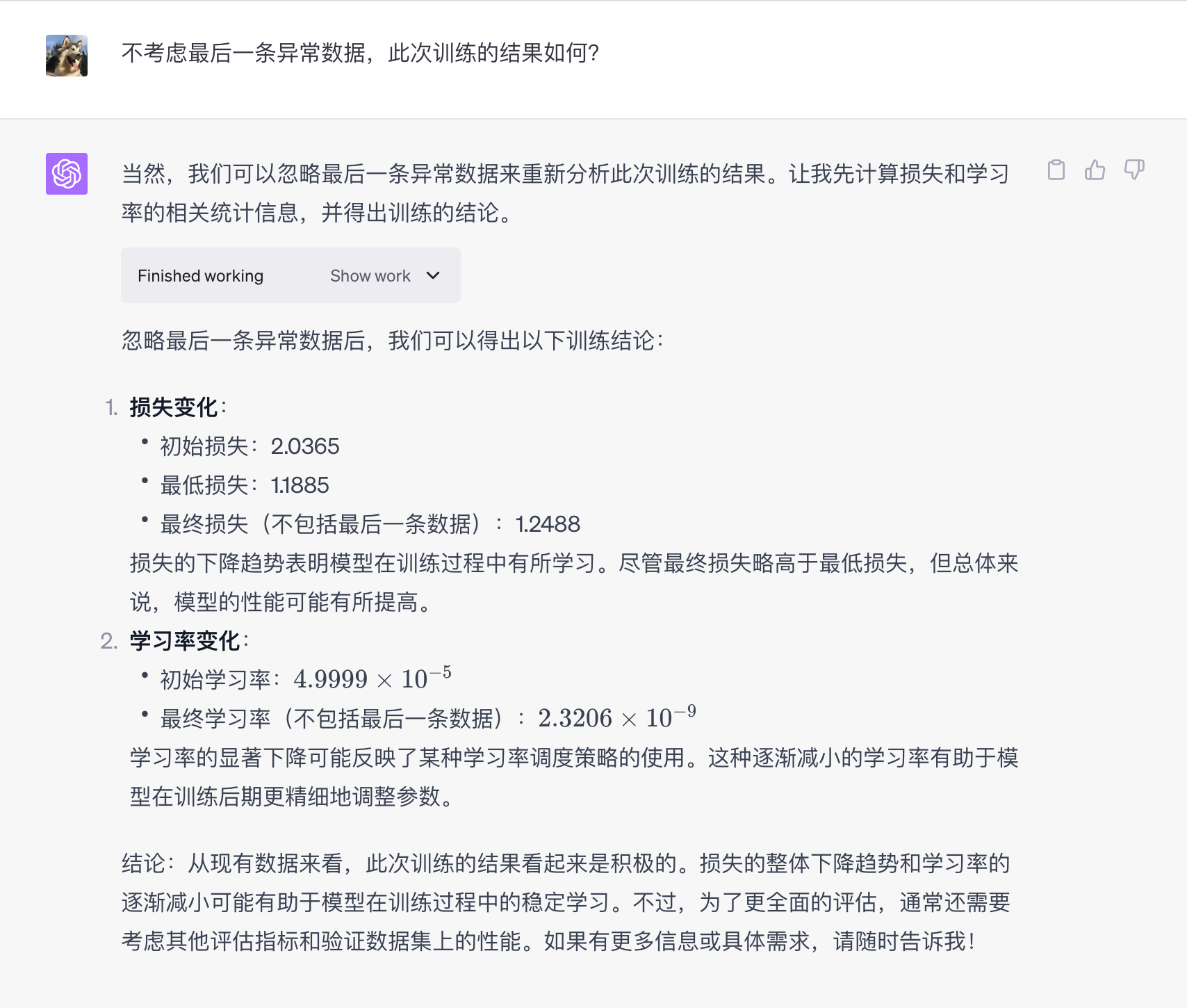

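How can you judge the effect of SFT?

This is a very complex question. One approach is to break your question down by scenario and then have an AI help answer it; refer to the figure below. You can then keep sending your specific questions and let the AI analyze them step by step.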

暂无内容

老师你好,我希望能用一个openwrt路由器实现IPv4和IPv6的桥接,请问我该如何实现?我尝试了直接新增dhcpv6的接口,但是效果不甚理想(无法成功获取公网的ipv6,但是直连上级路由的其他设备是可以获取公网的ipv6地)

你好

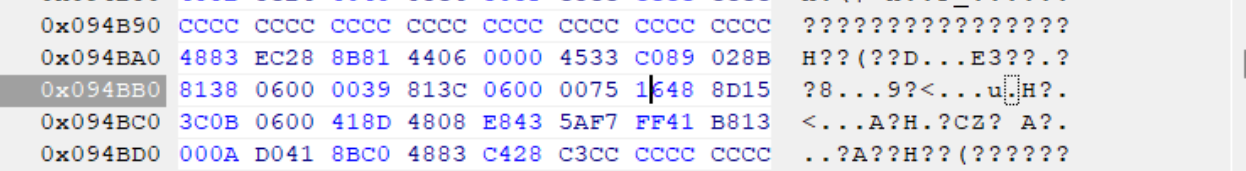

,为什么我这里是0039 813C 0600 0075 16xx xx xx,只有前6组是相同的,博客中要前8位相同,这个不同能不能照着修改呢?我系统版本是Win1124H2

大神你好,win11专业版24h2最新版26100.2033,文件如何修改?谢谢

win11专业版24h2最新版26100.2033,Windows Feature Experience Pack 1000.26100.23.0。C:\Windows\System32\termsrv.dll系统自带的这个文件,39 81 3C 06 00 00 0F 85 XX XX XX XX 替换为 B8 00 01 00 00 89 81 38 06 00 00 90。仍然无法远程连接。原来是win11 21h2系统,是可以远程链接的。共享1个主机,2个显示器,2套键鼠,各自独立操作 各自不同的账号,不同的桌面环境。

博主,win11专业版24h2最新版,C:\Windows\System32\termsrv.dll系统自带的这个文件,找不到应该修改哪个字段。我的微信:一三五73二五九五00,谢谢